W&B Inference

W&B Inference provides access to leading open-source foundation models via W&B Weave and an OpenAI-compatible API. With W&B Inference, you can:

- Develop AI applications and agents without signing up for a hosting provider or self-hosting a model.

- Try the supported models in the W&B Weave Playground.

W&B Inference credits are included with Free, Pro, and Academic plans for a limited time. Availability may vary for Enterprise. Once credits are consumed:

- Free accounts must upgrade to a Pro plan to continue using Inference.

- Pro plan users will be billed for Inference overages on a monthly basis, based on the model-specific pricing.

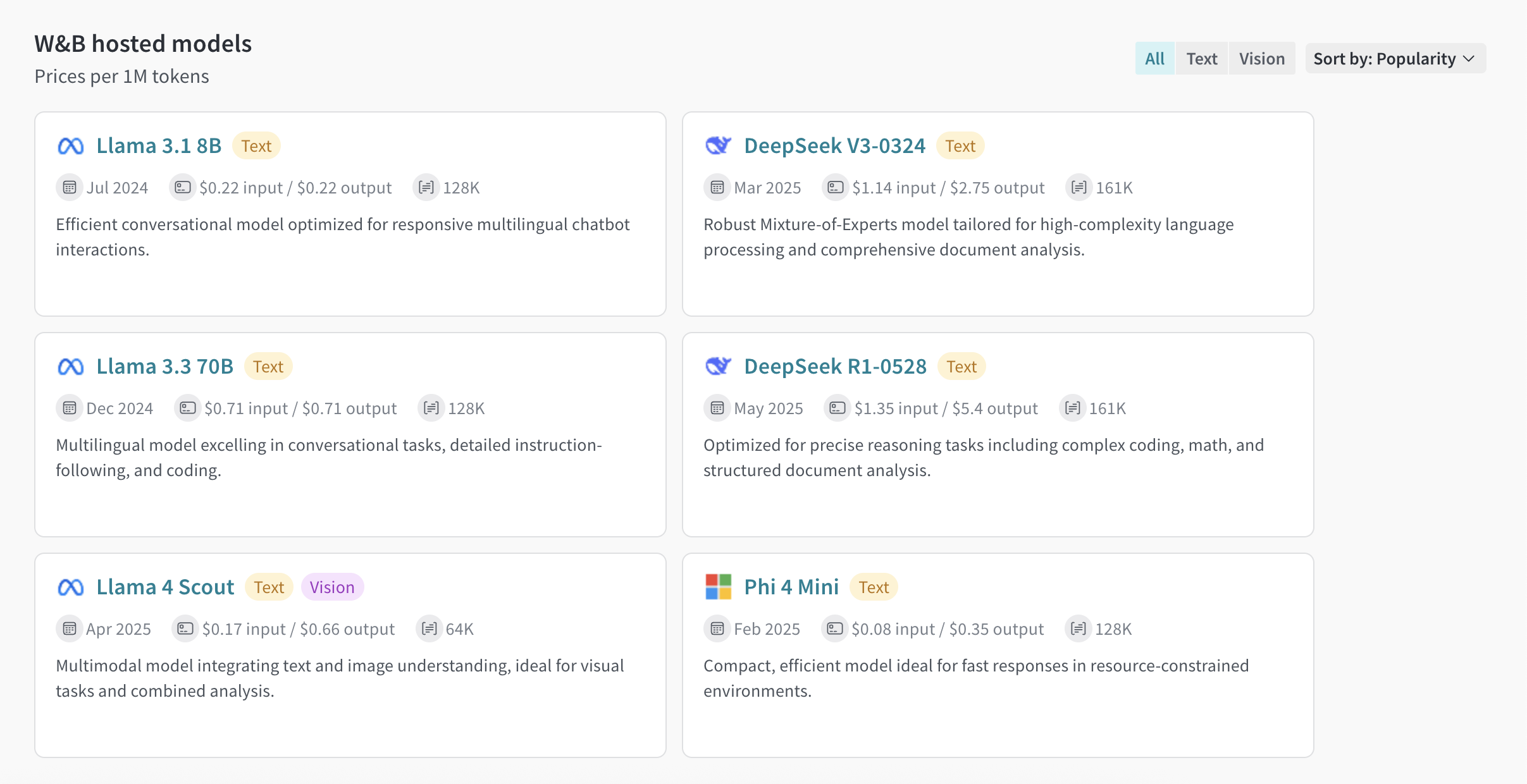

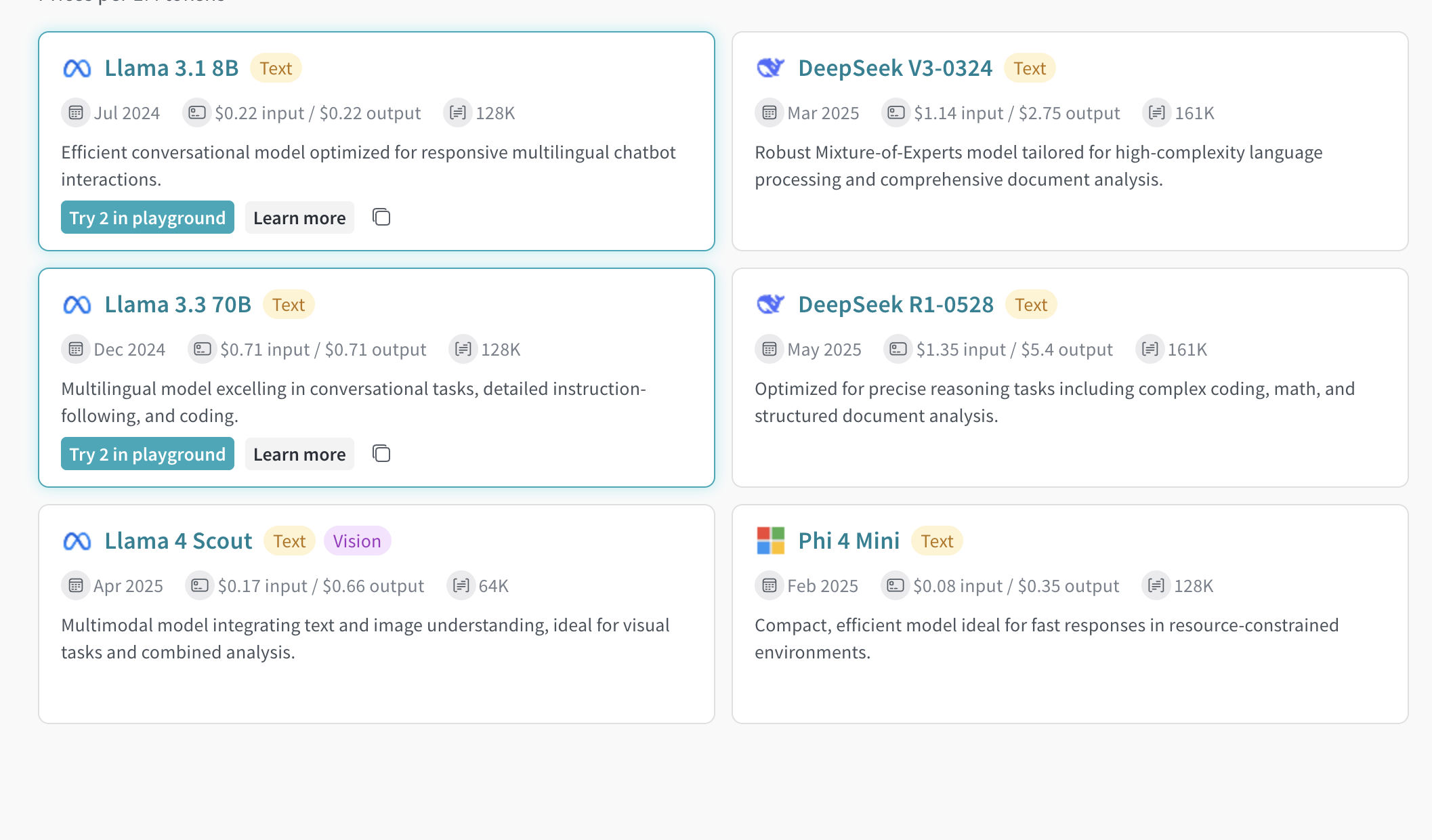

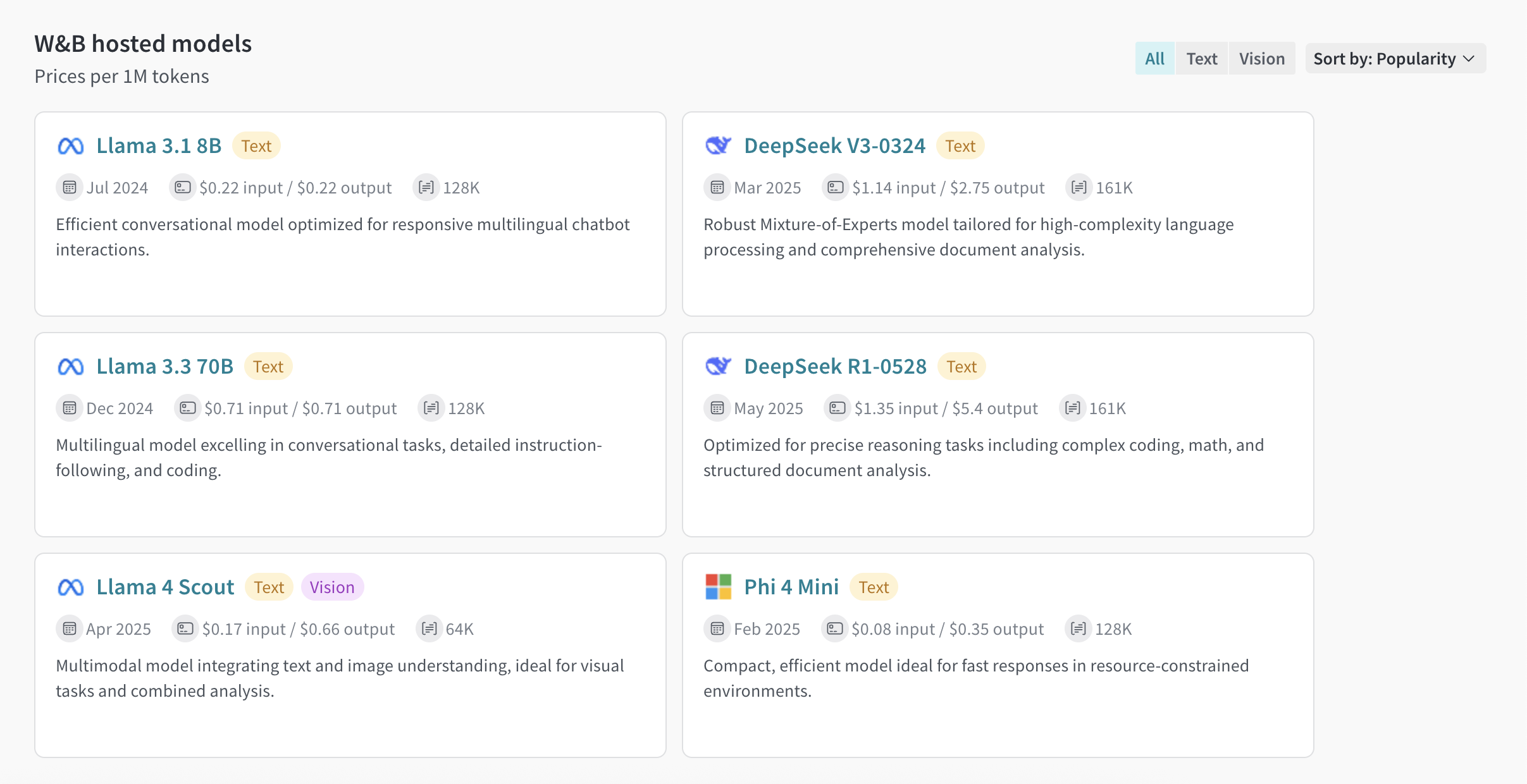

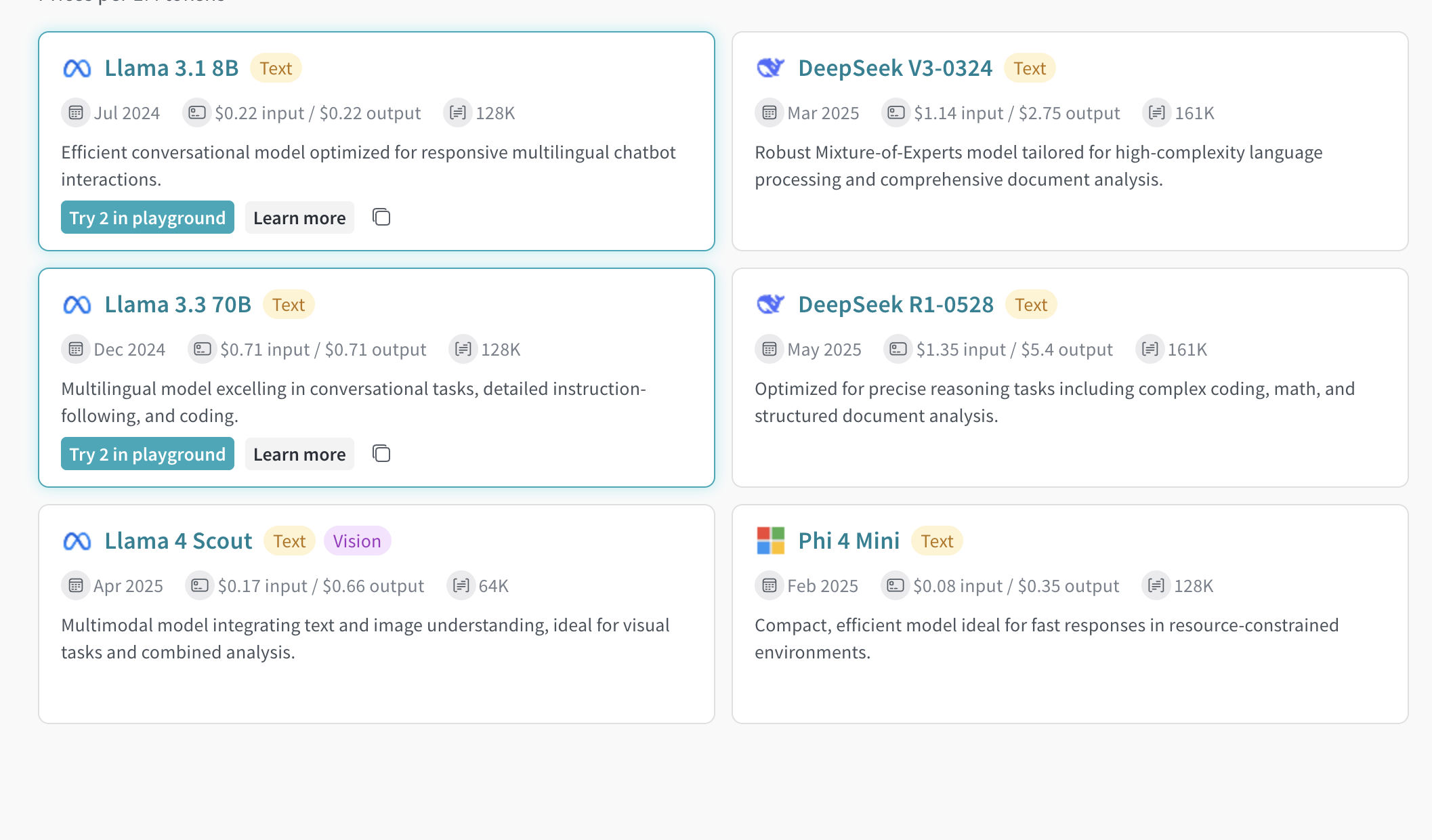

To learn more, see the pricing page and W&B Inference model costs.

| Model | Model ID (for API usage) | Type(s) | Context Window | Parameters | Description |
|---|---|---|---|---|---|
| DeepSeek R1-0528 | deepseek-ai/DeepSeek-R1-0528 | Text | 161K | 37B - 680B (Active - Total) | Optimized for precise reasoning tasks including complex coding, math, and structured document analysis. |
| DeepSeek V3-0324 | deepseek-ai/DeepSeek-V3-0324 | Text | 161K | 37B - 680B (Active - Total) | Robust Mixture-of-Experts model tailored for high-complexity language processing and comprehensive document analysis. |
| Llama 3.1 8B | meta-llama/Llama-3.1-8B-Instruct | Text | 128K | 8B (Total) | Efficient conversational model optimized for responsive multilingual chatbot interactions. |
| Llama 3.3 70B | meta-llama/Llama-3.3-70B-Instruct | Text | 128K | 70B (Total) | Multilingual model excelling in conversational tasks, detailed instruction-following, and coding. |
| Llama 4 Scout | meta-llama/Llama-4-Scout-17B-16E-Instruct | Text, Vision | 64K | 17B - 109B (Active - Total) | Multimodal model integrating text and image understanding, ideal for visual tasks and combined analysis. |
| Phi 4 Mini | microsoft/Phi-4-mini-instruct | Text | 128K | 3.8B (Active - Total) | Compact, efficient model ideal for fast responses in resource-constrained environments. |

Prerequisites

The following prerequisites are required to access the W&B Inference service via the API or the W&B Weave UI.

- A W&B account. Sign up here.

- A W&B API key. Get your API key at https://wandb.ai/authorize.

- A W&B project.

- If you are using the Inference service via Python, see Additional prerequisites for using the API via Python.

Additional prerequisites for using the API via Python

To use the Inference API via Python, first complete the general prerequisites. Then, install the openai and weave libraries in your local environment:

pip install openai weave

The weave library is only required if you’ll be using Weave to trace your LLM applications. For information on getting started with Weave, see the Weave Quickstart.

For usage examples demonstrating how to use the W&B Inference service with Weave, see the API usage examples.

API specification

The following section provides API specification information and API usage examples.

Endpoint

The Inference service can be accessed via the following endpoint:

https://api.inference.wandb.ai/v1

To access this endpoint, you must have a W&B account with Inference service credits allocated, a valid W&B API key, and a W&B entity (also referred to as “team”) and project. In the code samples in this guide, entity (team) and project are referred to as <your-team>/<your-project>.

Available methods

The Inference service supports the following API methods:

Chat completions

The primary API method available is /chat/completions, which supports OpenAI-compatible request formats for sending messages to a supported model and receiving a completion. For usage examples demonstrating how to use the W&B Inference service with Weave, see the API usage examples.

To create a chat completion, you will need:

- The Inference service base URL: https://api.inference.wandb.ai/v1

- Your W&B API key: <your-api-key>

- Your W&B entity and project names: <your-team>/<your-project>

- The ID for the model you want to use, one of:

  - meta-llama/Llama-3.1-8B-Instruct
  - deepseek-ai/DeepSeek-V3-0324
  - meta-llama/Llama-3.3-70B-Instruct
  - deepseek-ai/DeepSeek-R1-0528
  - meta-llama/Llama-4-Scout-17B-16E-Instruct
  - microsoft/Phi-4-mini-instruct

curl https://api.inference.wandb.ai/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer <your-api-key>" \
  -H "OpenAI-Project: <your-team>/<your-project>" \
  -d '{
    "model": "<model-id>",
    "messages": [
      { "role": "system", "content": "You are a helpful assistant." },
      { "role": "user", "content": "Tell me a joke." }
    ]
  }'
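For readers who want to see how the curl command maps onto a plain HTTP request, here is a minimal sketch using only the Python standard library. It builds the request without sending it, so it runs without credentials. The helper name build_chat_request is hypothetical, and the placeholder values must be replaced before the request is actually sent.

```python
import json
import urllib.request

BASE_URL = "https://api.inference.wandb.ai/v1"


def build_chat_request(api_key: str, project: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for /chat/completions."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The W&B API key is sent as a bearer token.
            "Authorization": f"Bearer {api_key}",
            # Entity and project travel in the OpenAI-Project header.
            "OpenAI-Project": project,
        },
        method="POST",
    )


request = build_chat_request(
    api_key="<your-api-key>",
    project="<your-team>/<your-project>",
    model="meta-llama/Llama-3.1-8B-Instruct",
    prompt="Tell me a joke.",
)
print(request.get_method(), request.full_url)
# To send it, pass the request to urllib.request.urlopen() and parse the JSON body.
```
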

import openai

client = openai.OpenAI(
    # The custom base URL points to W&B Inference
    base_url='https://api.inference.wandb.ai/v1',

    # Get your API key from https://wandb.ai/authorize
    # Consider setting it in the environment as OPENAI_API_KEY instead for safety
    api_key="<your-api-key>",

    # Team and project are required for usage tracking
    project="<your-team>/<your-project>",
)

# Replace <model-id> with any of the following values:
# meta-llama/Llama-3.1-8B-Instruct
# deepseek-ai/DeepSeek-V3-0324
# meta-llama/Llama-3.3-70B-Instruct
# deepseek-ai/DeepSeek-R1-0528
# meta-llama/Llama-4-Scout-17B-16E-Instruct
# microsoft/Phi-4-mini-instruct
response = client.chat.completions.create(
    model="<model-id>",
    messages=[
        {"role": "system", "content": "<your-system-prompt>"},
        {"role": "user", "content": "<your-prompt>"}
    ],
)

print(response.choices[0].message.content)
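The comment in the sample above suggests keeping the API key in the environment rather than in source code. One possible way to do that is sketched below. OPENAI_API_KEY is the variable named in that comment. WANDB_INFERENCE_PROJECT and the helper inference_client_kwargs are hypothetical names chosen for this sketch, not settings the service defines.

```python
import os
from typing import Mapping

BASE_URL = "https://api.inference.wandb.ai/v1"


def inference_client_kwargs(env: Mapping[str, str] = os.environ) -> dict:
    """Collect keyword arguments for openai.OpenAI(...) from environment variables.

    WANDB_INFERENCE_PROJECT is a hypothetical variable name used only in this sketch.
    """
    api_key = env.get("OPENAI_API_KEY")
    if not api_key:
        raise RuntimeError("Set OPENAI_API_KEY to your W&B API key.")
    project = env.get("WANDB_INFERENCE_PROJECT")
    if not project or "/" not in project:
        raise RuntimeError("Set WANDB_INFERENCE_PROJECT to <your-team>/<your-project>.")
    return {"base_url": BASE_URL, "api_key": api_key, "project": project}


# An explicit mapping is passed here so the sketch runs without real credentials.
kwargs = inference_client_kwargs(
    {
        "OPENAI_API_KEY": "<your-api-key>",
        "WANDB_INFERENCE_PROJECT": "<your-team>/<your-project>",
    }
)
print(kwargs["base_url"])
# With the openai package installed: client = openai.OpenAI(**kwargs)
```

Failing early on a missing key or a project without a team prefix gives a clearer error than the 401 response the API would otherwise return.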

List supported models

Use the API to query all currently available models and their IDs. This is useful for selecting models dynamically or inspecting what’s available in your environment.

curl https://api.inference.wandb.ai/v1/models \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer <your-api-key>" \
  -H "OpenAI-Project: <your-team>/<your-project>"

import openai

client = openai.OpenAI(
    base_url="https://api.inference.wandb.ai/v1",
    api_key="<your-api-key>",
    project="<your-team>/<your-project>"
)

response = client.models.list()

for model in response.data:
    print(model.id)
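As an illustration of selecting a model dynamically, the following sketch picks the first model from a preference list that the service currently reports. The helper pick_model is hypothetical, and the available list is hard-coded so the example runs offline. In real use it would come from the model IDs returned by client.models.list().

```python
from typing import Iterable, Optional, Sequence


def pick_model(available: Iterable[str], preferred: Sequence[str]) -> Optional[str]:
    """Return the first preferred model ID that appears in the available IDs."""
    available_set = set(available)
    for model_id in preferred:
        if model_id in available_set:
            return model_id
    return None


# Stand-in for [m.id for m in client.models.list().data].
available = [
    "meta-llama/Llama-3.1-8B-Instruct",
    "microsoft/Phi-4-mini-instruct",
]
# Ordered from most to least preferred.
preferred = [
    "deepseek-ai/DeepSeek-V3-0324",
    "meta-llama/Llama-3.1-8B-Instruct",
]

chosen = pick_model(available, preferred)
print(chosen)
```
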

Usage examples

This section provides several examples demonstrating how to use W&B Inference with Weave:

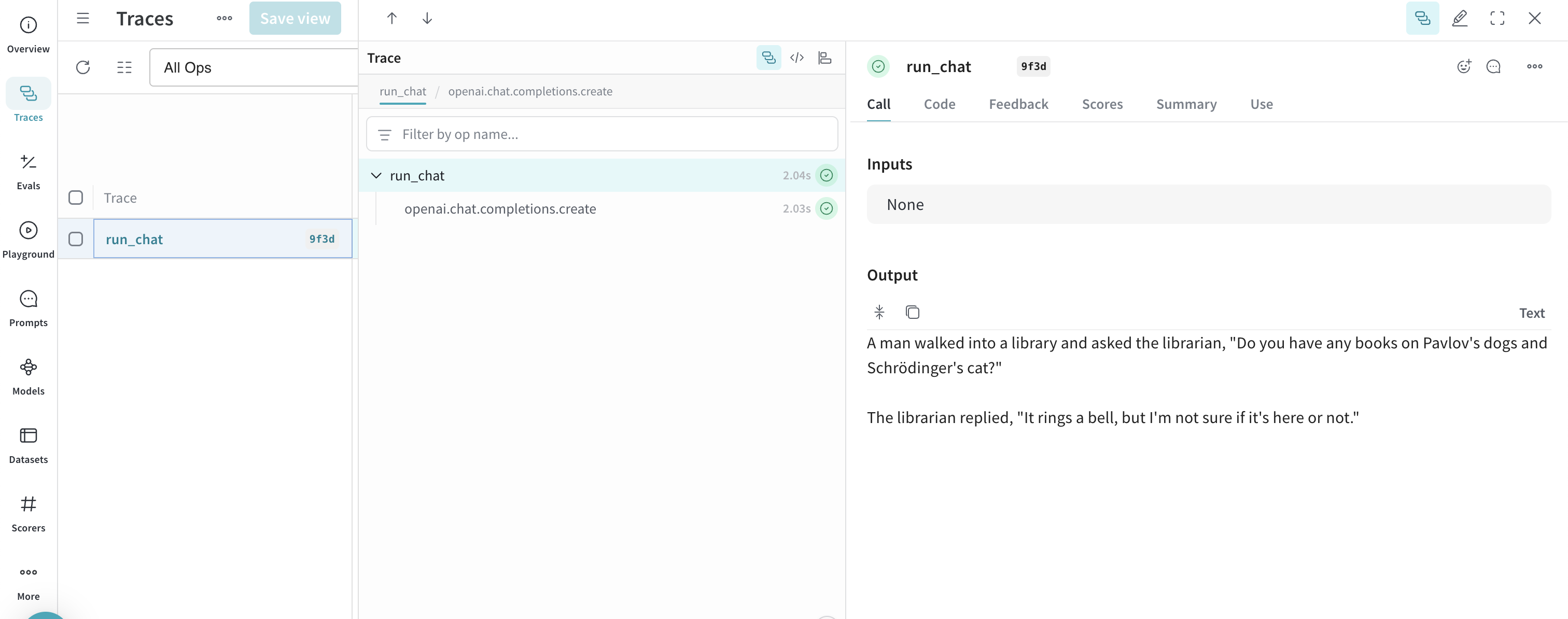

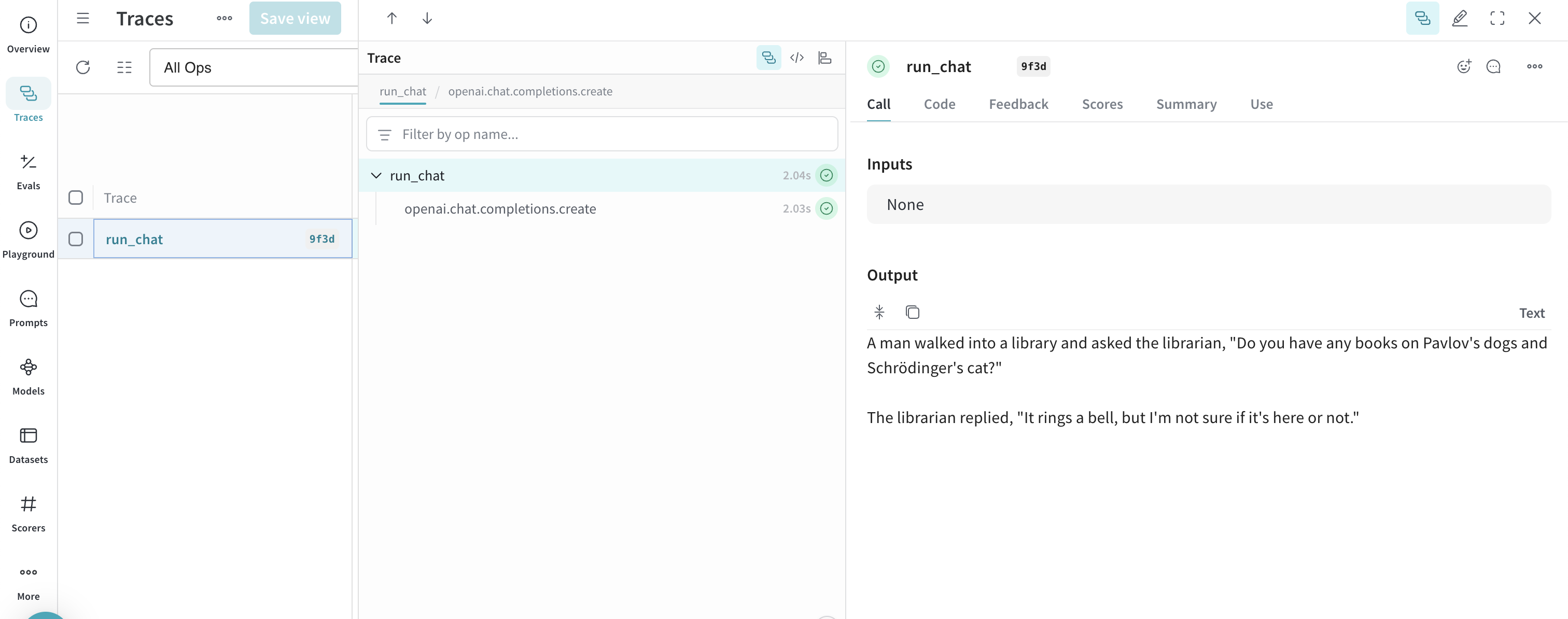

Basic example: Trace Llama 3.1 8B with Weave

The following Python code sample shows how to send a prompt to the Llama 3.1 8B model using the W&B Inference API and trace the call in Weave. Tracing lets you capture the full input/output of the LLM call, monitor performance, and analyze results in the Weave UI.

In this example:

- You define a @weave.op()-decorated function, run_chat, which makes a chat completion request using the OpenAI-compatible client.

- Your traces are recorded and associated with the W&B entity and project that you set with project="<your-team>/<your-project>".

- The function is automatically traced by Weave, so its inputs, outputs, latency, and metadata (like model ID) are logged.

- The result is printed in the terminal, and the trace appears in your Traces tab at https://wandb.ai under the specified project.

To use this example, you must complete the general prerequisites and Additional prerequisites for using the API via Python.

import weave
import openai

# Set the Weave team and project for tracing
weave.init("<your-team>/<your-project>")

client = openai.OpenAI(
    base_url='https://api.inference.wandb.ai/v1',

    # Get your API key from https://wandb.ai/authorize
    api_key="<your-api-key>",

    # Required for W&B inference usage tracking
    project="<your-team>/<your-project>",
)

# Trace the model call in Weave
@weave.op()
def run_chat():
    response = client.chat.completions.create(
        model="meta-llama/Llama-3.1-8B-Instruct",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Tell me a joke."}
        ],
    )
    return response.choices[0].message.content

# Run and log the traced call
output = run_chat()
print(output)

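After you run the code sample, you can view the trace in Weave by clicking the link printed in the terminal (for example, https://wandb.ai/<your-team>/<your-project>/r/call/01977f8f-839d-7dda-b0c2-27292ef0e04g), or:

- Navigate to https://wandb.ai.

- Select the Traces tab to view your Weave traces.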

Next, try the advanced example.

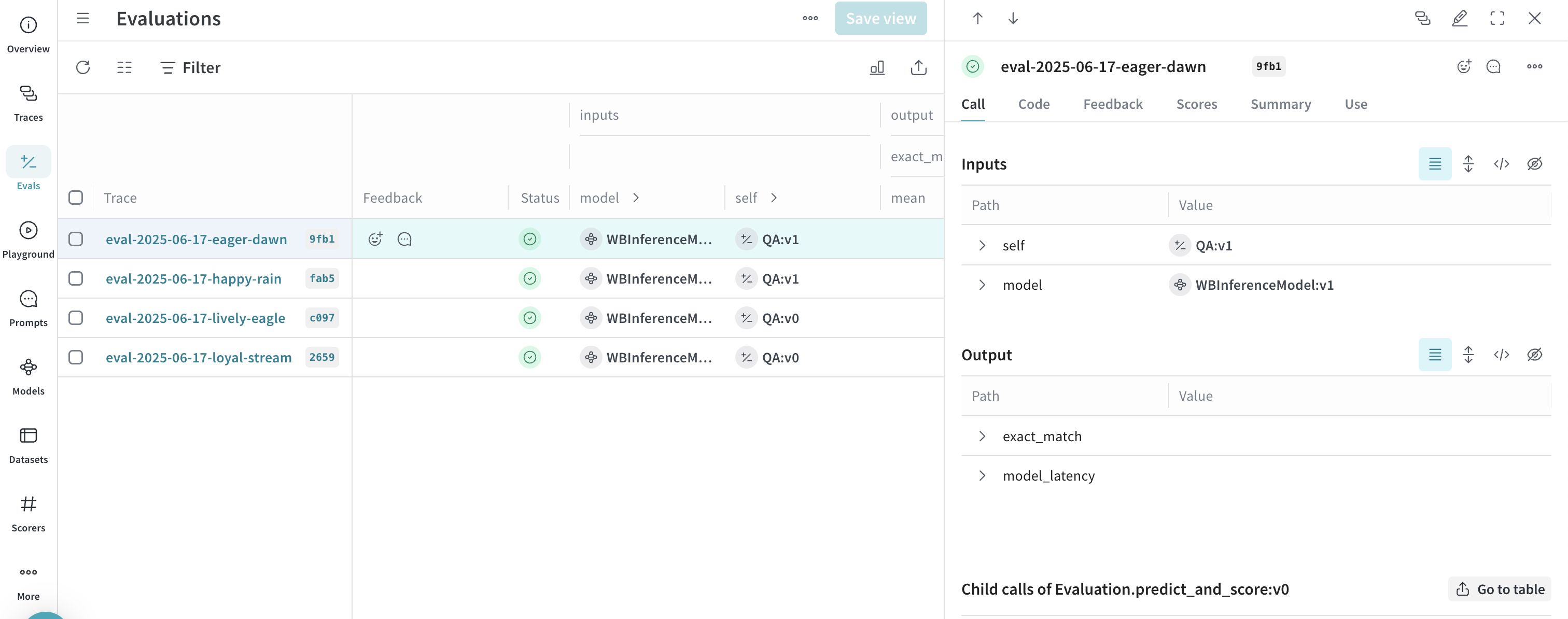

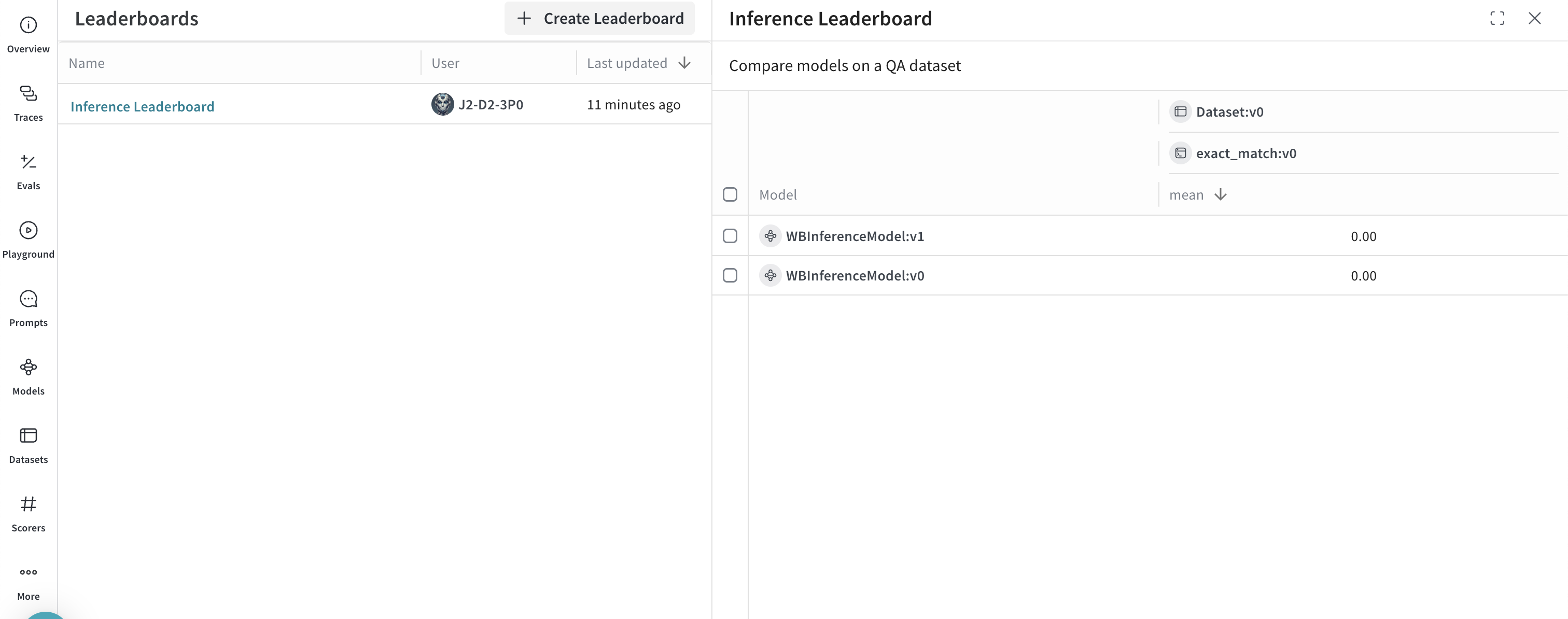

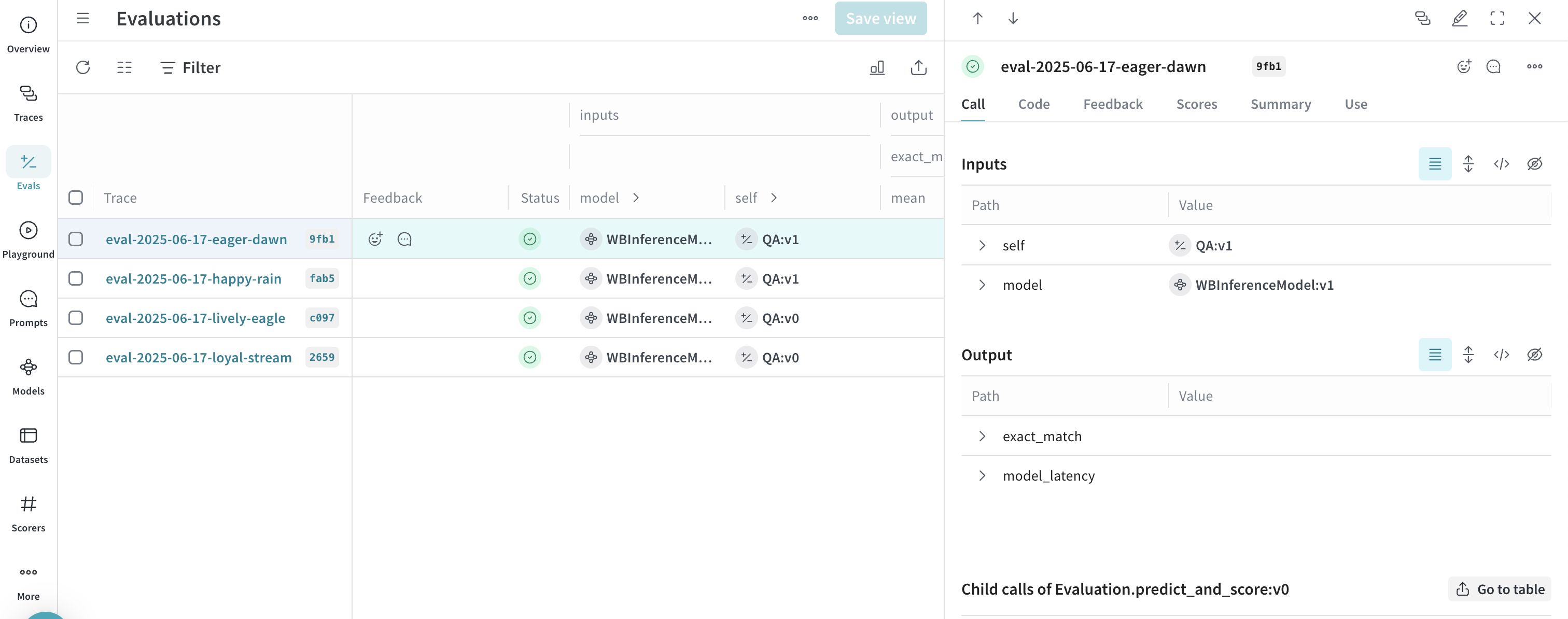

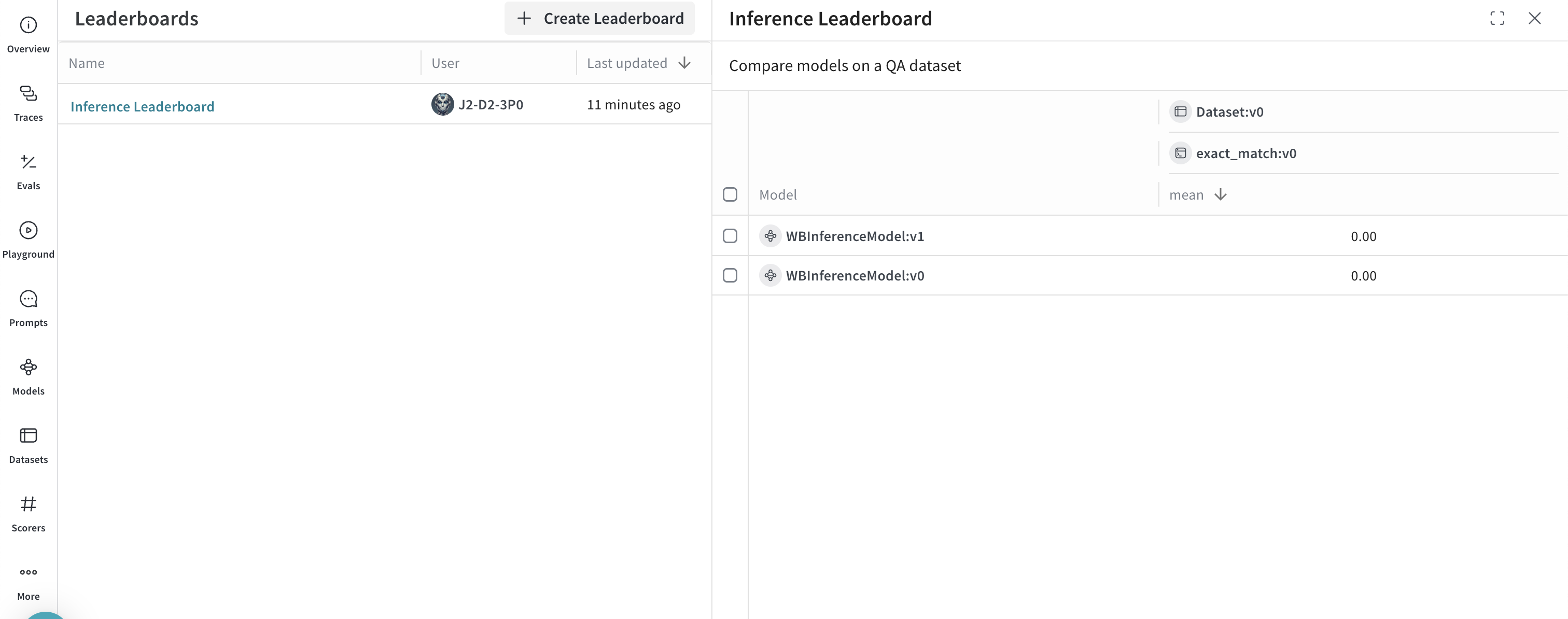

Advanced example: Use Weave Evaluations and Leaderboards with the inference service

In addition to tracing model calls, you can use Weave with the Inference service to evaluate model performance and publish a leaderboard. The following Python code sample compares two models on a simple question–answer dataset.

To use this example, you must complete the general prerequisites and Additional prerequisites for using the API via Python.

import os
import asyncio
import openai
import weave
from weave.flow import leaderboard
from weave.trace.ref_util import get_ref

# Set the Weave team and project for tracing
weave.init("<your-team>/<your-project>")

dataset = [
    {"input": "What is 2 + 2?", "target": "4"},
    {"input": "Name a primary color.", "target": "red"},
]

@weave.op
def exact_match(target: str, output: str) -> float:
    return float(target.strip().lower() == output.strip().lower())

class WBInferenceModel(weave.Model):
    model: str

    @weave.op
    def predict(self, prompt: str) -> str:
        client = openai.OpenAI(
            base_url="https://api.inference.wandb.ai/v1",
            # Get your API key from https://wandb.ai/authorize
            api_key="<your-api-key>",
            # Required for W&B inference usage tracking
            project="<your-team>/<your-project>",
        )
        resp = client.chat.completions.create(
            model=self.model,
            messages=[{"role": "user", "content": prompt}],
        )
        return resp.choices[0].message.content

llama = WBInferenceModel(model="meta-llama/Llama-3.1-8B-Instruct")
deepseek = WBInferenceModel(model="deepseek-ai/DeepSeek-V3-0324")

def preprocess_model_input(example):
    return {"prompt": example["input"]}

evaluation = weave.Evaluation(
    name="QA",
    dataset=dataset,
    scorers=[exact_match],
    preprocess_model_input=preprocess_model_input,
)

async def run_eval():
    await evaluation.evaluate(llama)
    await evaluation.evaluate(deepseek)

asyncio.run(run_eval())

spec = leaderboard.Leaderboard(
    name="Inference Leaderboard",
    description="Compare models on a QA dataset",
    columns=[
        leaderboard.LeaderboardColumn(
            evaluation_object_ref=get_ref(evaluation).uri(),
            scorer_name="exact_match",
            summary_metric_path="mean",
        )
    ],
)

weave.publish(spec)
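When reading the leaderboard, it helps to know how strict the exact_match scorer is. The sketch below reproduces its comparison in plain Python, without the Weave decorator, and applies it to a few made-up outputs. contains_match is a hypothetical, more lenient alternative shown only for contrast and is not part of the example above.

```python
def exact_match(target: str, output: str) -> float:
    """Same comparison as the scorer above: equal after trimming and lowercasing."""
    return float(target.strip().lower() == output.strip().lower())


def contains_match(target: str, output: str) -> float:
    """Hypothetical lenient variant: 1.0 if the target appears anywhere in the output."""
    return float(target.strip().lower() in output.strip().lower())


# Made-up (target, output) pairs for illustration.
samples = [
    ("4", " 4 "),               # surrounding whitespace is ignored
    ("red", "Red"),             # case is ignored
    ("4", "The answer is 4."),  # extra words break an exact match
]

for target, output in samples:
    print(repr(output), exact_match(target, output), contains_match(target, output))
```

Because chat models often answer in full sentences, an exact-match score can understate accuracy unless the prompt asks for a bare answer.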

The following section describes how to use the Inference service from the W&B UI. Before you can access the Inference service via the UI, complete the prerequisites.


Access the Inference service

You can access the Inference service via the Weave UI from two different locations:

Direct link

Navigate to https://wandb.ai/inference.

From the Inference tab

- Navigate to your W&B account at https://wandb.ai/.

- From the left sidebar, select Inference. A page with available models and model information displays.

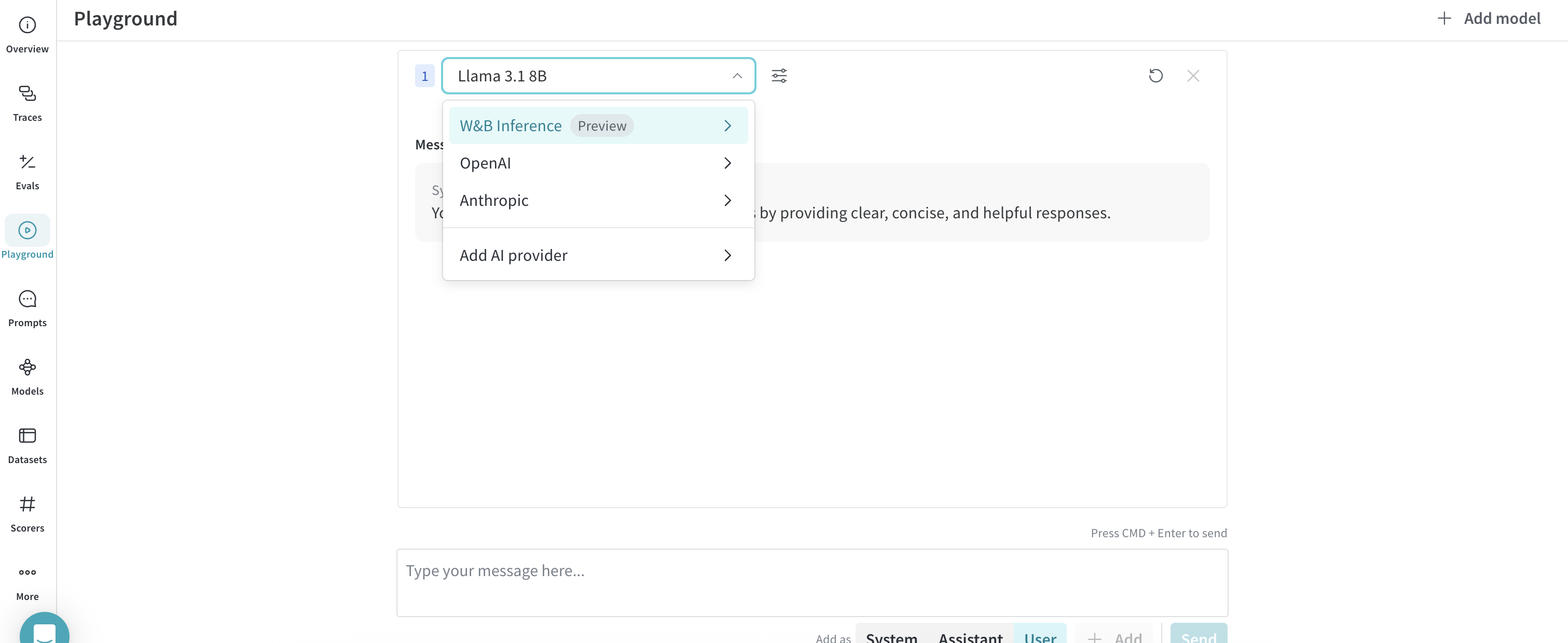

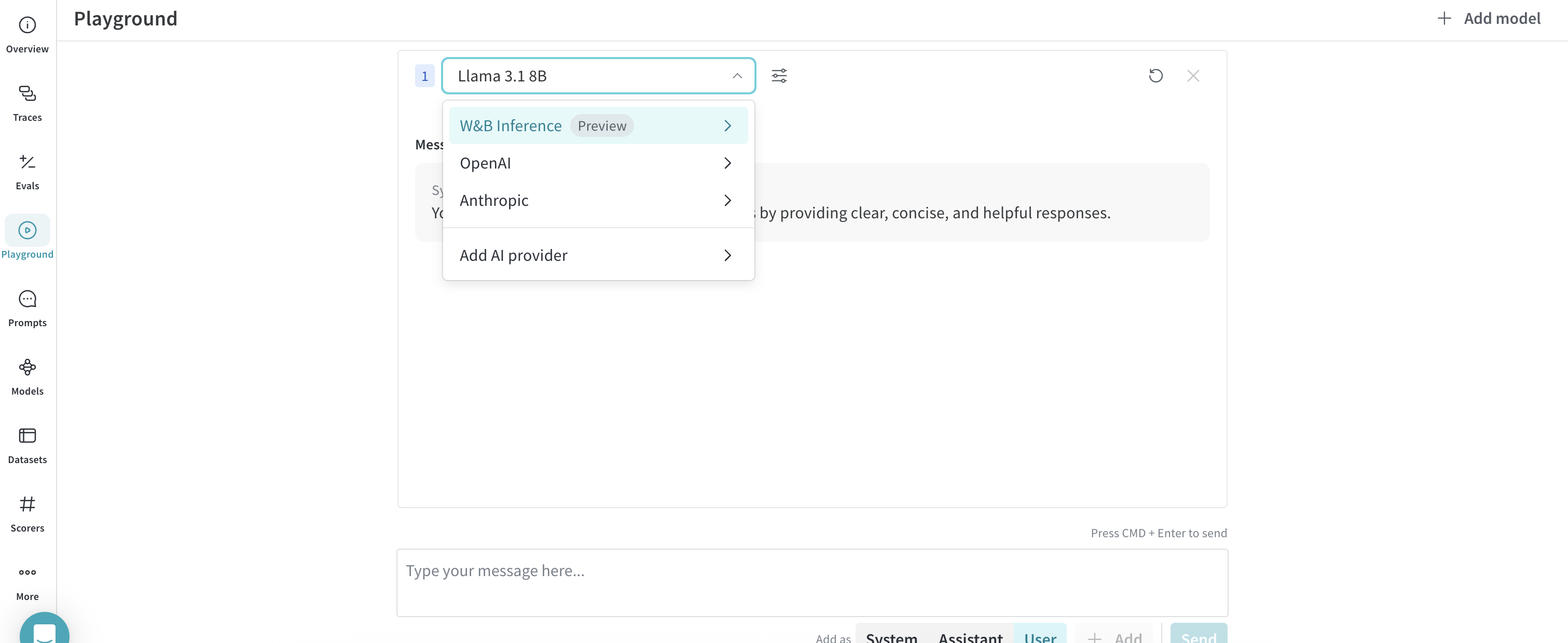

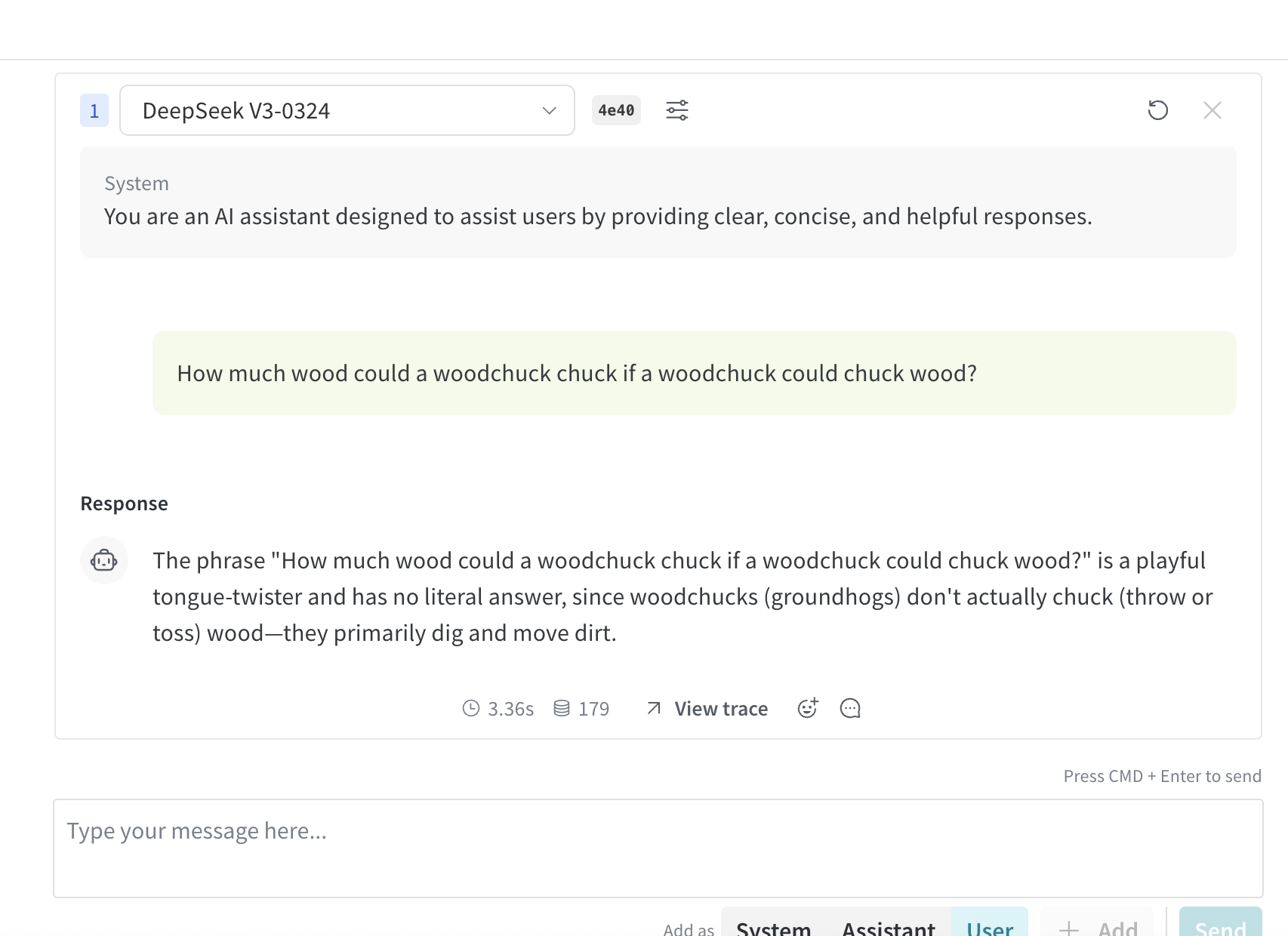

From the Playground tab

- From the left sidebar, select Playground. The Playground chat UI displays.

- From the LLM dropdown list, hover over W&B Inference. A dropdown with available W&B Inference models displays to the right.

- From the W&B Inference models dropdown, you can:

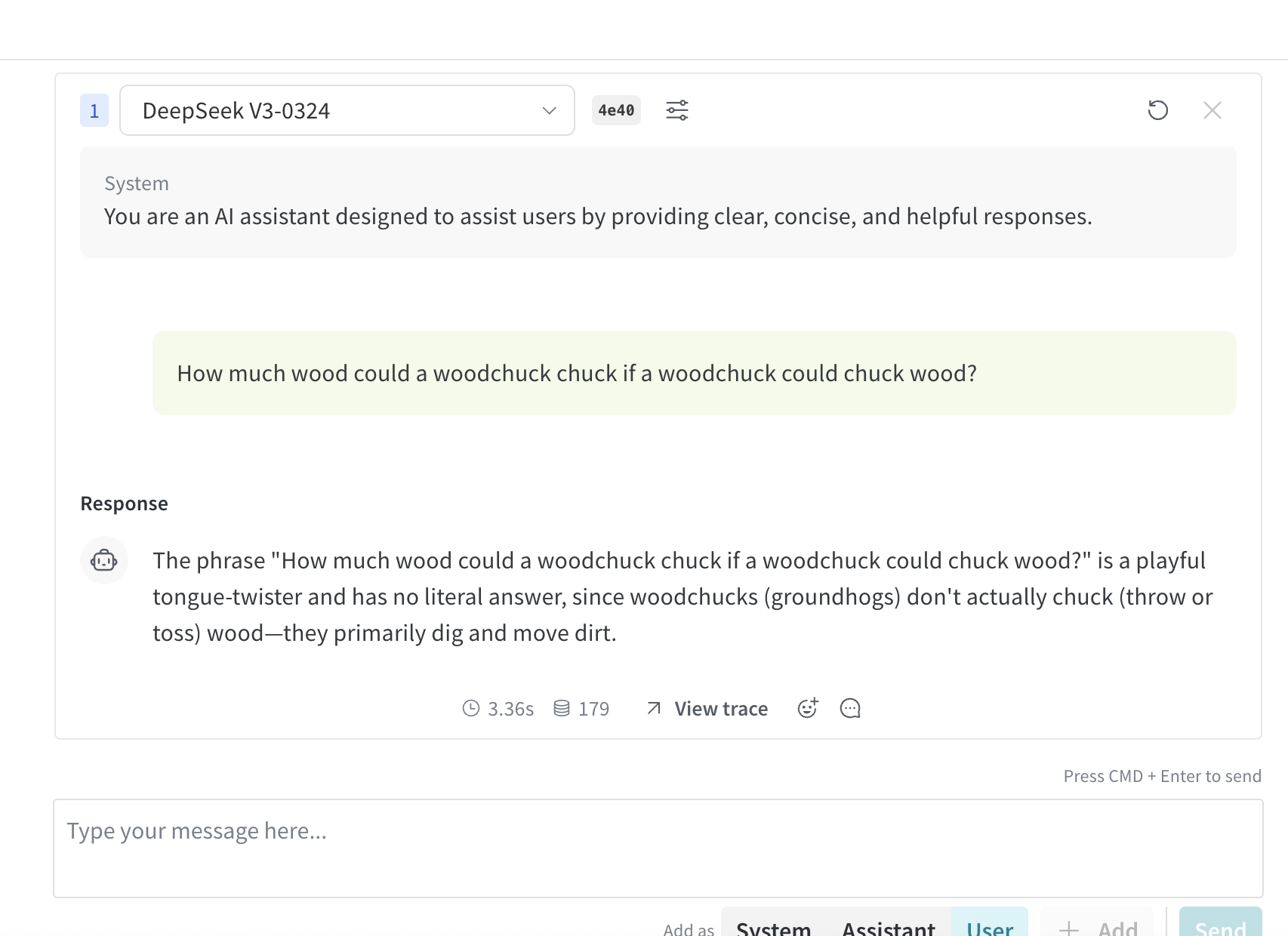

Try a model in the Playground

Once you’ve selected a model using one of the access options, you can try the model in Playground. The following actions are available:

Compare multiple models

You can compare multiple Inference models in the Playground. The Compare view can be accessed from two different locations:

Access the Compare view from the Inference tab

1. From the left sidebar, select Inference. A page with available models and model information displays.

2. To select models for comparison, click anywhere on a model card (except for the model name). The border of the model card is highlighted in blue to indicate the selection.

3. Repeat step 2 for each model you want to compare.

4. In any of the selected cards, click the Compare N models in the Playground button (N is the number of models you are comparing. For example, when 3 models are selected, the button displays as Compare 3 models in the Playground). The comparison view opens.

Now, you can compare models in the Playground, and use any of the features described in Try a model in the Playground.

Access the Compare view from the Playground tab

1. From the left sidebar, select Playground. The Playground chat UI displays.

2. From the LLM dropdown list, hover over W&B Inference. A dropdown with available W&B Inference models displays to the right.

3. From the dropdown, select Compare. The Inference tab displays.

4. To select models for comparison, click anywhere on a model card (except for the model name). The border of the model card is highlighted in blue to indicate the selection.

5. Repeat step 4 for each model you want to compare.

6. In any of the selected cards, click the Compare N models in the Playground button (N is the number of models you are comparing. For example, when 3 models are selected, the button displays as Compare 3 models in the Playground). The comparison view opens.

Now, you can compare models in the Playground, and use any of the features described in Try a model in the Playground.

Organization admins can track current Inference credit balance, usage history, and upcoming billing (if applicable) directly from the W&B UI:

- In the W&B UI, navigate to the W&B Billing page.

- In the bottom right-hand corner, find the Inference billing information card. From here, you can:

  - Click the View usage button in the Inference billing information card to view your usage over time.

  - If you’re on a paid plan, view your upcoming inference charges.

The following section describes important usage information and limits. Familiarize yourself with this information before using the service.

Geographic restrictions

The Inference service is only accessible from supported geographic locations. For more information, see the Terms of Service.

Concurrency limits

To ensure fair usage and stable performance, the W&B Inference API enforces rate limits at the user and project level. These limits help:

- Prevent misuse and protect API stability

- Ensure access for all users

- Manage infrastructure load effectively

If you exceed a limit, the API returns a 429 response with the message Concurrency limit reached for requests. To resolve this error, reduce the number of concurrent requests.
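One way to reduce the number of concurrent requests on the client side is to cap how many calls are in flight at once. The sketch below does this with asyncio.Semaphore. The helper run_bounded and the cap of 3 are assumptions for illustration, since this page does not state the actual limit, and fake_request stands in for a real API call.

```python
import asyncio


async def run_bounded(factories, max_concurrent: int):
    """Run coroutine factories with at most max_concurrent in flight at once."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def guarded(factory):
        # Each call waits here until a slot is free.
        async with semaphore:
            return await factory()

    # gather() returns results in the same order as the inputs.
    return await asyncio.gather(*(guarded(f) for f in factories))


# Tracks how many stand-in requests overlap, to show the cap is respected.
stats = {"in_flight": 0, "peak": 0}


async def fake_request(i: int) -> int:
    """Stand-in for an Inference API call; sleeps briefly instead of using the network."""
    stats["in_flight"] += 1
    stats["peak"] = max(stats["peak"], stats["in_flight"])
    await asyncio.sleep(0.01)
    stats["in_flight"] -= 1
    return i


results = asyncio.run(
    run_bounded([lambda i=i: fake_request(i) for i in range(10)], max_concurrent=3)
)
print(results, "peak concurrency:", stats["peak"])
```
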

Pricing

For model pricing information, visit https://wandb.ai/site/pricing/inference.

API errors

| Error Code | Message | Cause | Solution |
|---|---|---|---|
| 401 | Invalid Authentication | Invalid authentication credentials or your W&B project entity and/or name are incorrect. | Ensure the correct API key is being used and/or that your W&B project name and entity are correct. |
| 403 | Country, region, or territory not supported | Accessing the API from an unsupported location. | See Geographic restrictions. |
| 429 | Concurrency limit reached for requests | Too many concurrent requests. | Reduce the number of concurrent requests. |
| 429 | You exceeded your current quota, please check your plan and billing details | Out of credits or reached monthly spending cap. | Purchase more credits or increase your limits. |
| 500 | The server had an error while processing your request | Internal server error. | Retry after a brief wait and contact support if it persists. |
| 503 | The engine is currently overloaded, please try again later | Server is experiencing high traffic. | Retry your request after a short delay. |
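The table suggests that only some errors are worth retrying: 500, 503, and the concurrency form of 429. A minimal sketch of that policy with exponential backoff follows. The helpers should_retry and call_with_retry, the attempt count, and the delays are all assumptions for illustration, and the transport is a stand-in that returns (status, body) tuples rather than a real client.

```python
import time

RETRYABLE_STATUS = {500, 503}
CONCURRENCY_MESSAGE = "Concurrency limit reached"


def should_retry(status: int, message: str) -> bool:
    """Decide whether a failed call is worth retrying, following the table above."""
    if status in RETRYABLE_STATUS:
        return True
    # 429 covers two cases; only the concurrency case clears up by waiting.
    return status == 429 and CONCURRENCY_MESSAGE in message


def call_with_retry(send, max_attempts: int = 4, base_delay: float = 0.5, sleep=time.sleep):
    """Call send() -> (status, body), retrying retryable errors with exponential backoff."""
    for attempt in range(max_attempts):
        status, body = send()
        if status < 400:
            return status, body
        if not should_retry(status, str(body)) or attempt == max_attempts - 1:
            raise RuntimeError(f"Request failed with {status}: {body}")
        # Wait base_delay, then 2x, 4x, ... before the next attempt.
        sleep(base_delay * 2 ** attempt)


# Stand-in transport: fails twice with 503, then succeeds.
responses = iter([
    (503, "The engine is currently overloaded, please try again later"),
    (503, "The engine is currently overloaded, please try again later"),
    (200, "ok"),
])
delays = []  # Records the waits instead of actually sleeping.
result = call_with_retry(lambda: next(responses), sleep=delays.append)
print(result, delays)
```

Authentication, location, and quota errors are raised immediately in this sketch, because waiting does not fix them.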